At NCACE we are committed to evaluating our work not only for accountability to our partners, but also to inform the continuous improvement of our activities. We are particularly interested in understanding how well our approach is facilitating and supporting capacity for Knowledge Exchange between Higher Education Institutions (HEIs) and arts and culture organisations (ACOs), and how this approach can be further improved and evolve to support future developments.

We see “Evaluation as a valuable catalyst for the effectiveness and efficiency of NCACE”, and this is reflected in our vision for evaluation, which emphasises formative evaluation, or “evaluation for improvement”. In that respect, we place the emphasis on learning, improvement and the identification of strengths and weaknesses, mainly from the perspective of beneficiaries and key stakeholders (Patton, 2005). The aim is to learn how to be more effective and to improve or change things that are not working well. In short, the aim is to make our work as valuable as possible.

Our purpose is to enable the development and ongoing evaluation of both the processes and activities underpinning NCACE and its results (outputs and outcomes). Being catalytic and ground-breaking in supporting collaboration between Higher Education and the arts and culture sectors is at the heart of our ambition at NCACE. In line with this ambition, NCACE evaluation supports ongoing dialogue and integration across each area of work (Work Package), to ensure effectiveness and efficiency in programme co-design and delivery. NCACE evaluation focuses on programme and effectiveness evaluation[1]. It combines performance-based and value-based evaluation standards, working at both individual and organisational levels by setting and evaluating specific desired results or outcomes, key performance indicators (KPIs), and performance targets. Emphasis is given to methodological pluralism, participatory action research (e.g. engaging our Sounding Board members, regional partners and other key stakeholders), empowerment evaluation (e.g. evaluation of micro-commissions projects), beneficiary-focused research (e.g. HEI and ACO surveys), and formative feedback in decision making, action research and internal evaluation.

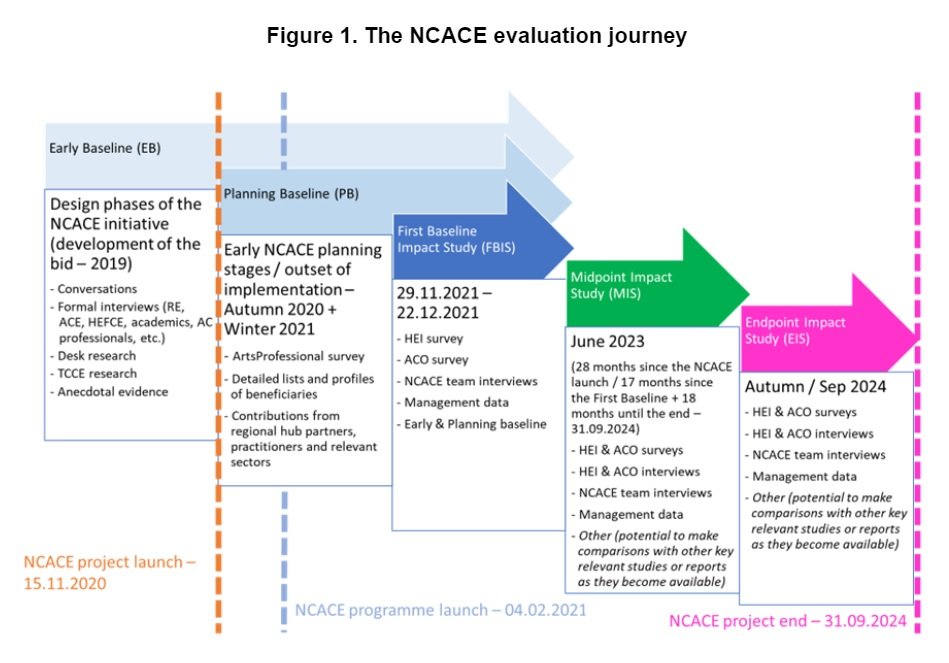

Reflecting the bold and complex nature of our initiative to establish the National Centre for Academic and Cultural Exchange (NCACE), our evaluation strategy adopted a composite baseline approach. This means that the NCACE baseline position was developed collectively and progressively through three baseline elements: an early baseline (during the NCACE design phase and bid development); a planning baseline (at the outset of implementation, in autumn 2020/winter 2021); and a first baseline impact study (in autumn/winter 2021). Insights from the last of these, the first baseline impact study, form the basis for comparison with the midpoint and endpoint positions (see Figure 1).

The First Baseline Impact Study (FBIS), and the report produced as part of it, marked the completion of the composite NCACE baseline position. In addition to setting the baseline for comparison with the midpoint and endpoint positions, the FBIS presented NCACE outputs and outcomes from the first 10 months, as well as emerging HEI and ACO perspectives on the value of these outputs and outcomes. Importantly, the 10 months between the NCACE launch event (4 February 2021) and the point of FBIS primary data collection (the “FBIS point”, December 2021) is a rather limited period for progress towards NCACE outcomes to accumulate and become clearly noticeable. In addition, the impacts of the Covid-19 pandemic on the HEI and ACO sectors, as well as on wider society and the economy in the UK and around the world, were still very evident in the first 10 months of the NCACE programme of activities. Despite this, the study captured some very useful insights that helped us further develop our activities and the NCACE programme. More specifically, the FBIS report captured the first baseline impact of NCACE, which has been used for both formative evaluation (feeding forward into and improving future planning) and summative evaluation. The report supported summative evaluation by demonstrating achievements at the FBIS point to external audiences and funders, and by setting the baseline for comparison with the endpoint impact study, which will be carried out at the end of the NCACE project (September 2023) to demonstrate its ultimate results.

Formative evaluation has been applied by sharing the FBIS report findings with the NCACE team and encouraging them to critically review, discuss, reflect on, and use the findings in the Centre’s future activities. This was achieved through a series of focused internal email communications and Key Performance Indicator review meetings, facilitated by the Evaluation Lead, which reviewed progress towards goals as well as the effectiveness of the KPIs themselves. A key milestone was our Connectivity and Integration Workshop, where the whole Centre team convened to reflect on the performance of their respective Work Packages (WPs) and to review how each WP connects, integrates, and co-creates value for NCACE. The aims of this workshop were to: provide WP teams with an opportunity for reflection and learning relevant to their WP activities and results; stimulate dialogue and clarify connectivity across all WPs; and support the formative evaluation of the NCACE FBIS report.

Our next key evaluation milestone for NCACE is the midpoint evaluation. Its purpose is to capture the value co-created by NCACE initiatives; identify lessons learned to inform improvements in implementation; inform funders about the progress made; and potentially support the rationale for continuation and further funding. Faithful to the spirit of co-creation, we recently invited key stakeholders to two consultation meetings (Evaluation Advisory Meetings), where we shared and discussed our ideas and proposals for the midpoint evaluation methodology. The meetings were valuable in shaping the final methodological approach and provided useful insights for the design of effective data collection tools. All advisory meeting participants welcomed our intention to keep the midpoint evaluation relevant, focused, and short. The aim is to prioritise the NCACE bid KPIs and to enable comparative analysis with the baseline on important aspects of our work. The consultations also highlighted the need to focus on the evaluation insights that our key audiences need or want to hear from us. This will make our evaluation efforts, particularly in reporting findings, more efficient. From a strategic perspective, the midpoint evaluation is seen as a key tool for developing the strategic narrative that will help us advocate for the future development and sustainability of the Centre.

An important consideration in our discussions has been our interest in capturing evaluation data on how well NCACE facilitates or enables the co-creation of knowledge exchange value and the creation of a knowledge exchange community. With that in mind, it was agreed that collecting meaningful and relevant data will require both quantitative and qualitative approaches. The quantitative approach will involve open and targeted surveys. The open surveys will be separate surveys for HEIs and ACOs, capturing broader insights on developments in the knowledge exchange (KE) field. The targeted surveys will invite a selected audience of HEIs and ACOs, chosen on the basis of their engagement with NCACE events and their role in KE. As for our qualitative approach, the value of narrative data has been at the forefront of discussions in all our midpoint-related NCACE team meetings, as well as in the two Evaluation Advisory Meetings. The consensus has consistently been that narrative data will provide rich opportunities for a deep understanding of NCACE performance and the results achieved at midpoint. Narrative data collected during past NCACE events (mostly via Padlet and online chats) provides ample evidence of the richness and value that this kind of data can generate. For the midpoint evaluation, narrative data will be generated through semi-structured interviews with key NCACE stakeholders.

Our evaluation methodology includes the ongoing (since the NCACE launch) collection of key management data that inform several NCACE bid KPIs. We have been regularly checking our systems to make sure that we continuously develop this important dataset, which will also inform our midpoint evaluation. Finally, the NCACE midpoint position in terms of the performance of our Evaluation Work Package (WP5) will be informed by interviews with the core NCACE team. The findings will then be compared with those from similar interviews conducted at the baseline point.

Our data collection activities for the midpoint evaluation have kicked off this week and will continue throughout June 2023. We invite you to participate in our surveys, one aimed at people working within Higher Education and the other aimed at people working in or with the arts and cultural sector. We would really value your contributions and your support.

The survey links are here:

NCACE Midpoint Survey (for people working in arts and cultural organisations)

NCACE Midpoint Survey (for people working in HEIs)

Image: Author's own

Dr Thanasis Spyriadis

NCACE Evaluation Lead

thanasis@tcce.co.uk

__________________________________________________________________

[1] Programme evaluation determines current and desired results or outcomes and their use. Effectiveness evaluation determines the extent to which the NCACE programme meets its stated goals and objectives.